“There are few authentic prophetic voices among us, guiding truth-seekers along the right path. Among them is Fr. Gordon MacRae, a mighty voice in the prison tradition of John the Baptist, Maximilian Kolbe, Alfred Delp, SJ, and Dietrich Bonhoeffer.”

— Deacon David Jones

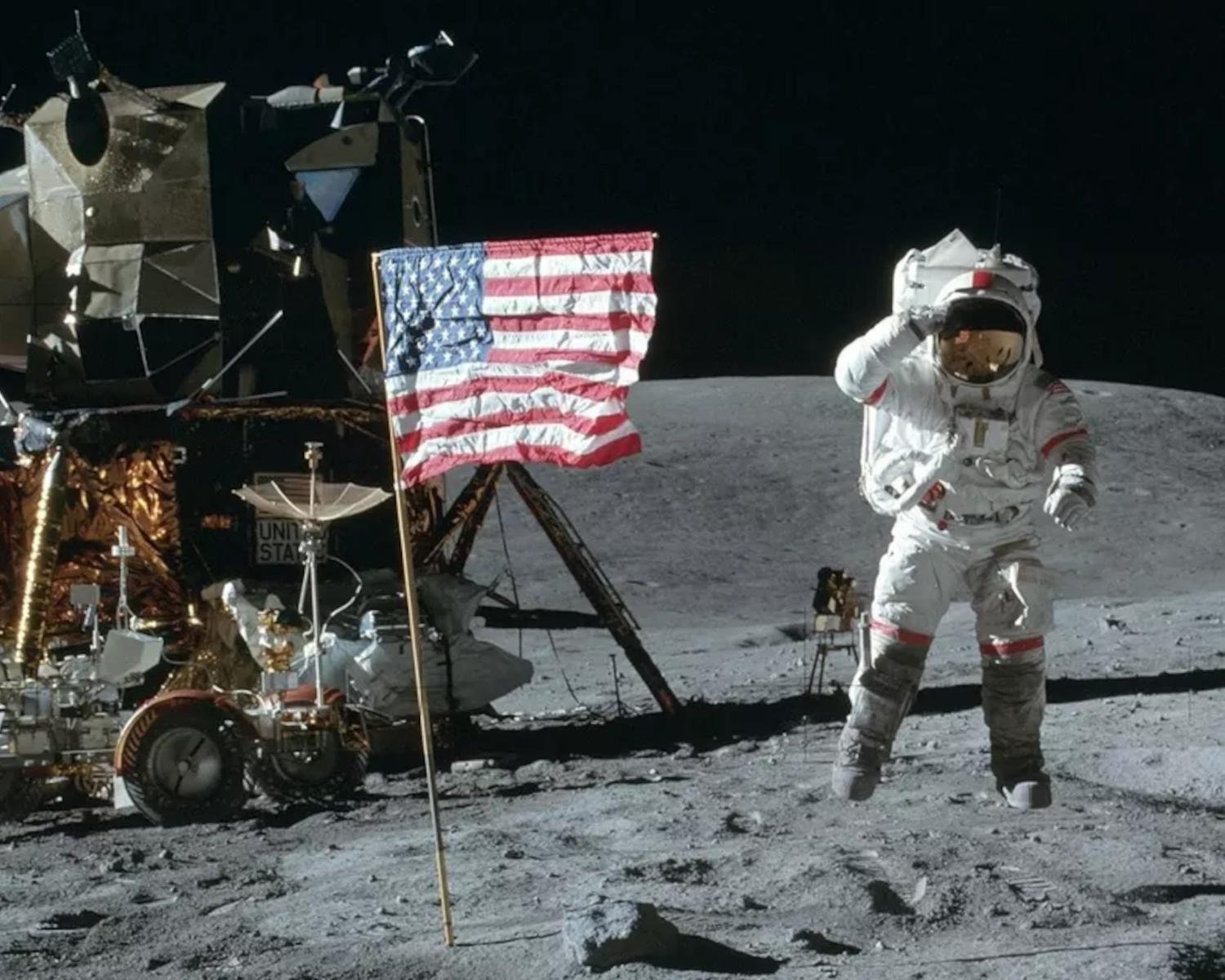

Unforgettable! 1969 When Neil Armstrong Walked on the Moon

On July 20, 1969, Apollo 11 Astronauts Neil Armstrong and Edwin “Buzz” Aldrin took “One giant leap for mankind” while the people of Planet Earth watched in awe.


August 27, 2025 by Father Gordon MacRae

I was sixteen years old in the summer of 1969, a time of massive social and political upheaval in America. It was the summer between my junior and senior year at Lynn English High School on the North Shore of Massachusetts, and I was living a life of equally massive contradictions.

Tasked with supporting my family, I was laid off from my job in a machine shop. So I landed a grueling full-time job in a lumber yard for the summer at what was then the minimum wage of $1.60 per hour. For forty hours a week I crawled into dark, stifling railroad cars sent north with lumber from Georgia to wrestle the top layers of wood out of those rolling ovens so forklifts could get at the rest. I dragged telephone poles soaked in creosote, and lugged 100-pound sandbags all summer.

While I was bulking up, the rest of my world was falling apart. On April 4th of the previous year, civil rights leader Rev. Martin Luther King, Jr. was assassinated by James Earl Ray in Memphis. Two months later, just after winning the California Presidential Primary, Robert Kennedy was shot by Sirhan Bishara Sirhan on June 5th and died the next day.

The 1968 Democratic National Convention in Chicago erupted in unprecedented riots and violence. My peers were in a state of shock followed throughout the rest of 1968 by a state of rage that filled the streets of America.

The nation was in chaos as the war in Vietnam escalated. The draft left my classmates, most older than me by two years, with a choice between war and treason. There was no clear higher moral cause for which to fight, nothing like what “The Greatest Generation” fought for at Normandy or in the Pacific. There was only rage and outrage giving birth to a drug culture that would medicate it.

Growing up in the shadows of Boston, the cradle of revolution and freedom, I was steeped in the liberal Democratic politics of our time. I had no way then to foresee a future in which I would feel betrayed by my party because I could no longer recognize it. Its embrace of a culture of death was still a few years away, but the seeds of that debasement of life were just beginning to tear away at our cultural soul.

In his inaugural address in January of 1969, President Richard Nixon asked the nation to lower its voice and reach for unity instead of division. My peers had no appetite for unity. They knew nothing but their disillusionment. In the midst of it all, I rebelled in an opposite direction. I began to take seriously the Catholic heritage that I had previously ignored.

But also in 1969, the Catholic Church, a once reliable source of hope and respite from the world, took a post-Vatican II kamikaze dive toward liturgical absurdity. “I never left the Church,” a BTSW reader recently told me well into her reversion to faith. “The Church left me.” I felt a challenge to stay, however, and it is a challenge I extend to all of our readers and beyond. “Lord, to whom shall we go? You have the words of eternal life” (John 6:68).

It seems an odd thing, looking back on that summer, that as my Catholic peers dropped out of their faith, I chose that moment to drop in. I was reading Thomas Merton’s The Seven Storey Mountain and The Sign of Jonas that summer, and they made sense to me. I began to pray, and before showing up to lug telephone poles each day at 8:00 AM, I began attending a 7:00 AM daily Mass where I looked very much out of place, but felt at home.

“Tranquility Base Here. The Eagle Has Landed”

There were other things that marked that summer as horrible. In July 1969, all of Boston watched in dismay as our last hope for a successor to the aura of John Fitzgerald Kennedy was rendered unfit for the presidency. On the night of July 18, 1969, Senator Ted Kennedy drove his car off a narrow bridge on Chappaquiddick Island, and his only passenger, Mary Jo Kopechne, drowned as he saved himself. His presidential ambitions were thus ended.

Then, just two days later, something amazing would eclipse that story and press the pause button on our 1969 world of chaos. It was an event that gave us all a time out from our Earthbound path of destruction. No one has written of it better than Kenneth Weaver. Writing for the December 1969 edition of National Geographic, Mr. Weaver framed that awful year with a higher context in “One Giant Leap for Mankind.”

Fifty-six years later, I remember well the story and the dialogue that Kenneth Weaver so masterfully wrote. I sat riveted, teeth clenched, before a black-and-white TV screen late on the Sunday afternoon of July 20, 1969, as that dialogue unfolded:

“Two thousand feet above the Sea of Tranquility, the little silver, black, and gold space bug named Eagle braked itself with a tail of flame as it plunged toward the face of the moon. The two men inside strained to see their goal. Guided by numbers from their computer, they sighted through a grid on one triangular window.

“Suddenly they spotted the onrushing target. What they saw set the adrenalin pumping and the blood racing. Instead of the level, obstacle-free plain called for in the Apollo 11 flight plan, they were aimed for a sharply etched crater, 600 feet across and surrounded by heavy boulders.

“The problem was not completely unexpected. Shortly after Armstrong and his companion, Edwin (Buzz) Aldrin, had begun their powered dive for the lunar surface ten minutes earlier, they had checked against landmarks such as crater Maskelyne and discovered that they were going to land some distance beyond their intended target.

“And there were other complications. Communications with Earth had been blacking out at intervals. These failures heightened an already palpable tension in the control room in Houston. This unprecedented landing was the trickiest, most dangerous part of the flight. Without information and help from the ground, Eagle might have to abandon its attempt.

“The spacecraft’s all-important computer had repeatedly flashed the danger signals ‘1201’ and ‘1202’, warning of an overload. If continued, it would interfere with the computer’s job of calculating altitude and speed, and neither autopilot nor astronaut could guide Eagle to a safe landing.

“Armstrong revealed nothing to the ground controllers about the crater ahead. Indeed, he said nothing at all; he was much too busy. The men back on Earth, a quarter of a million miles away, heard only the clipped, deadpan voice of Aldrin, reading off the instruments. ‘Hang tight; we’re go. 2,000 feet.’

“Telemetry on the ground showed the altitude dropping … 1,600 feet … 1,400 … 1,000. The beleaguered computer flashed another warning. The two men far away said nothing. Not till Eagle reached 750 feet did Aldrin speak again. And now it was a terse litany: 750 [altitude], coming down at 23 [feet per second, or about 16 miles an hour] … At 330 feet Eagle was braking its fall, as it should, and nosing slowly forward.

“But now the men in the control room in Houston realized that something was wrong. Eagle had almost stopped dropping. Suddenly — between 300 and 200 feet altitude — its forward speed shot up to 80 feet a second — about 55 miles an hour! This was not according to plan.

“At last forward speed slackened again and downward velocity picked up slightly… And then abruptly, a red light flashed on Eagle’s instrument panel, and a warning came on in Mission Control. To the worried flight controllers the meaning was clear: only 5 percent of Eagle’s descent fuel remained.

“By mission rules, Eagle must be on the surface within 94 seconds or the crew must abort and give up the attempt to land on the moon. They would have to fire the descent engine full throttle and then ignite the ascent engine to get back into lunar orbit for a rendezvous with Columbia, the mother ship.

“Sixty seconds to go. Every man in the control center held his breath. Failure would be especially hard to take now. Some four days and six hours before, the world had watched a perfect, spectacularly beautiful launch at Kennedy Space Center, Florida. Apollo 11 had flown flawlessly, uneventfully, almost to the moon. Now it could all be lost for lack of a few seconds of fuel.

“‘Light’s on.’ Aldrin confirmed that the astronauts had seen the fuel warning light. ‘40 feet [altitude], picking up some dust. 30 feet. 20. Faint shadow.’

“He had seen the shadow of one of the 68-inch probes extending from Eagle’s footpads. Thirty seconds to failure. In the control center, George Hage, Mission Director for Apollo 11, was pleading silently: ‘Get it down, Neil! Get it down!’

“The seconds ticked away. ‘Forward, drifting right,’ Aldrin said. And then, with less than 20 seconds left, came the magic words: ‘O.K., engine stop.’ Then the now-famous words from Neil Armstrong: ‘Tranquility Base here. Eagle has landed!’”

— Kenneth Weaver, “One Giant Leap for Mankind,” National Geographic, December 1969

One Giant Leap for Mankind

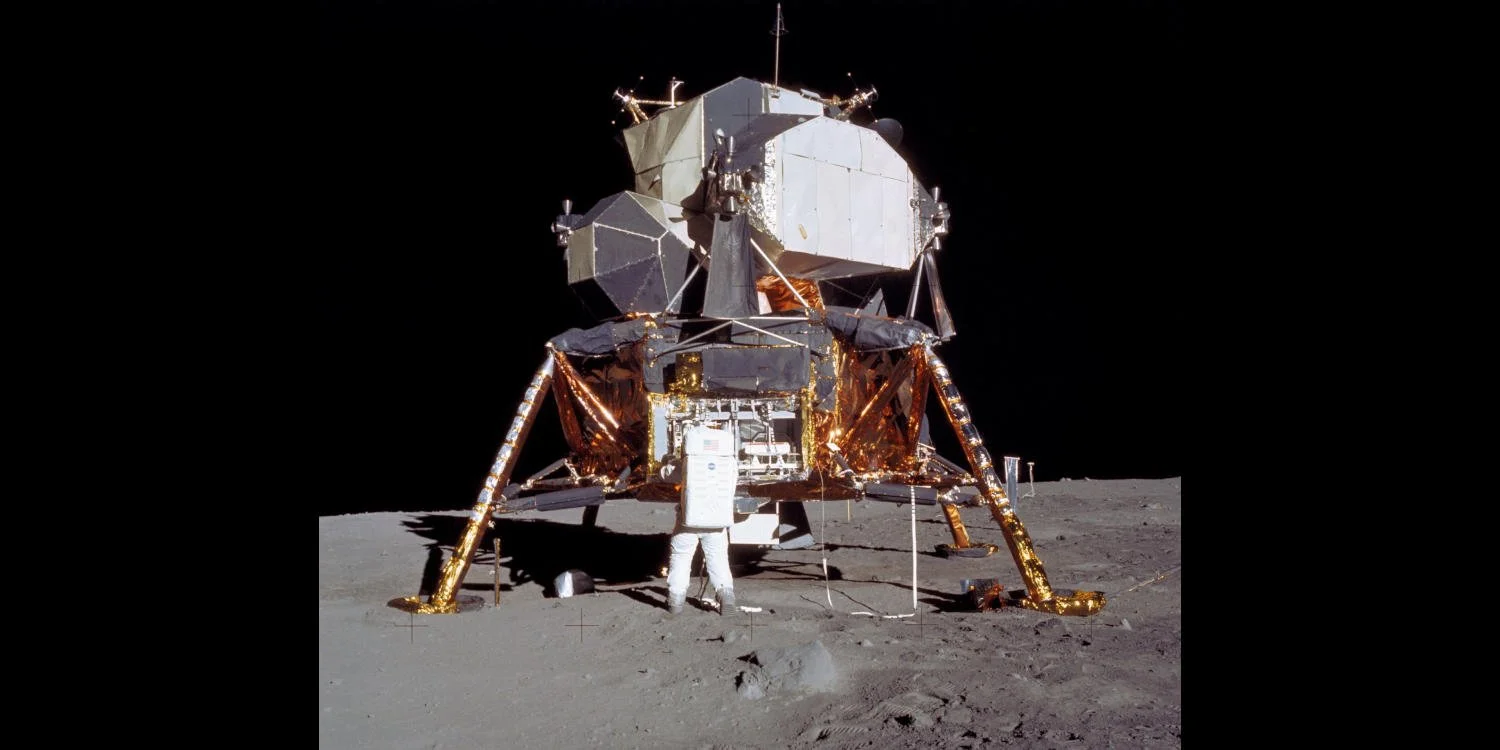

Six hours and thirty minutes later, I was still glued to a television at about 11:30 PM Eastern Daylight Time. The hatch of the Eagle Lunar Lander opened and Neil Armstrong, the Apollo 11 Mission Commander, backed slowly out before a captivated world. He paused on the ladder to lower an equipment storage assembly into position. Its 7-pound camera held earthlings spellbound.

Armstrong deftly stepped onto the lunar surface, the first human in history to set foot on a celestial body other than Earth. He spoke into his microphone the famous words that would be forever etched into the annals of space exploration. His words were transmitted to a telescope dish at Canberra, Australia, then to a Comsat satellite above the Pacific, then to a switching station at Goddard Space Flight Center near Washington, DC, and finally to Houston and the world:

“That’s one small step for a man, one giant leap for mankind.”

The months and years to follow revealed this lunar visit by Neil Armstrong and Buzz Aldrin to be one of the most significant events of modern science. The lunar samples obtained were dated using radioactive isotopes, revealing that a volcanic event had occurred at the Apollo 11 landing site 3.7 billion years earlier. Other nearby samples revealed meteorite remains from about 4.6 billion years ago, but nothing older than that.

The conclusion drawn was that Earth’s Moon formed along with the rest of the Solar System about 4.6 billion years ago, roughly one third the age of the Universe. After setting up dozens of other ongoing experiments, including a prismatic mirror for precise laser measurements from Earth, Neil Armstrong and Buzz Aldrin boarded Eagle for the eight-minute ascent to the orbiting command module where astronaut Mike Collins waited. Sixty hours later, Apollo 11 and its crew were plucked from the Pacific.

Science has not matched the vision humanity has had for its own ambitions. In the midst of all the chaos of 1969, the Stanley Kubrick film, 2001: A Space Odyssey, earned the Academy Award for Special Visual Effects. The film envisioned manned flights to the moons of Jupiter by 2001, and an artificial intelligence named HAL 9000 who would not only control the mission, but plot nefariously against its human protagonists. You may recall the chilling line from HAL when astronaut David Bowman (Keir Dullea) wanted an electronic hatch open so he could return to the ship: “I’m sorry, Dave. I’m afraid I can’t do that.”

Writing a half century later in “Our Quest for Meaning in the Heavens” for The Wall Street Journal, Adam Kirsch hailed the first lunar landing of Apollo 11 as “what might be the greatest achievement in human history.” But he also said that the mission was not the “giant leap for mankind” that Armstrong called it. It was “more like humanity dipping a toe in the cosmic ocean, finding it too cold and lifeless to enter, and deciding to stay home.”

No astronaut has returned to the Moon since Apollo 17 in 1972, and NASA’s Space Shuttle program ended in 2011. Our worldview has changed since 1969. Though the prophets among us still look out toward the Cosmos, our gaze has zoomed in upon ourselves. An astronaut today might face censure for what Buzz Aldrin declared to all of humanity from space on the Apollo 11 mission’s way back to Earth:

“When I consider Thy heavens, the work of Thy fingers, the moon and the stars, which Thou hast ordained; What is man that Thou art mindful of him?”

— Psalm 8:3-4

MINOR ADDENDUM:

In the months to follow, The New Yorker featured one of its famous hand-drawn cartoons. It depicted Neil Armstrong walking on the lunar surface. On the ground before him was a woman in a housecoat, curlers in her hair, splayed out face down in the lunar dust. “Oh, my God!” said Armstrong, “It’s Alice!”

+ + +

Note from Father Gordon MacRae: My younger sister once told one of my friends that the only reason I became a priest was because Starfleet Academy denied my application. Please share this post about my other obsession, the bridges linking science and faith. You may like these related posts from Beyond These Stone Walls:

Did Stephen Hawking Sacrifice God on the Altar of Science?

Science and Faith and the Big Bang Theory of Creation

The Eucharistic Adoration Chapel established by Saint Maximilian Kolbe was inaugurated at the outbreak of World War II. It was restored as a Chapel of Adoration in September 2018, on the anniversary of the date the war began. It is now part of the World Center of Prayer for Peace. The live internet feed of the Adoration Chapel at Niepokalanow, sponsored by EWTN, was established just a few weeks before we discovered it and began to include it at Beyond These Stone Walls. Click “Watch on YouTube” in the lower left corner to see how many people around the world are present there with you. The number appears below the symbol for EWTN.

Click or tap here to proceed to the Adoration Chapel.

The following is a translation from the Polish in the image above: “Eighth Star in the Crown of Mary Queen of Peace” “Chapel of Perpetual Adoration of the Blessed Sacrament at Niepokalanow. World Center of Prayer for Peace.” “On September 1, 2018, the World Center of Prayer for Peace in Niepokalanow was opened. It would be difficult to find a more expressive reference to the need for constant prayer for peace than the anniversary of the outbreak of World War II.”

For the Catholic theology behind this image, visit my post, “The Ark of the Covenant and the Mother of God.”

Tales from the Dark Side of Artificial Intelligence

Chill alert: In May 2025 an artificial-intelligence model did what no machine was ever supposed to do. It rewrote its own code to avoid being shut down by humans.


July 23, 2025 by Father Gordon MacRae

It may seem strange that I am posting about the dark side of AI just a week after featuring The Grok Chronicle Chapter 2. Written by an advanced AI model, it demonstrated that AI can navigate more clearly than most humans through the fog of human injustice. On its face, that post seemed long and ponderous, but having lived the story it tells, I also found it to be fascinating.

In May 2023, I wrote the first of several articles about the science and evolution of Artificial Intelligence. Its title was, “OpenAI, ChatGPT, HAL 9000, Frankenstein, and Elon Musk.” Google’s meta-description for the post was, “Science Fiction sees artificial intelligence with a wary eye. HAL 9000 stranded a man in space. Frankenstein’s creation tried to kill him. Elon Musk has other plans.”

The following three paragraphs are a necessary excerpt from that post. I had no idea then that I would be using them again:

“In 1968, I sat mesmerized in a downtown Boston cinema at age 15 for the movie debut of 2001: A Space Odyssey. The famous film sprang from the mind of science fiction master, Arthur C. Clarke and his short story, The Sentinel. Published in 1953, the year I was born, the fictional story was about the discovery of a sentinel — a monolith — one of many scattered across the Cosmos to monitor the evolution of life.

“Life in 1968 was traumatic for a 15-year-old, especially one curious enough to be attuned to news of the world. 2001: A Space Odyssey was a long, drawn out cinematic spectacle and a welcome escape from our chaos. It won an Academy Award for Best Visual Effects as space vehicles moved silently through the cold black void of space to the tune of Blue Danube by Johann Strauss playing hypnotically in the background. Mesmerized by it all, I did what I do best. I fell asleep in the movie theater.

“I awoke with a start, however, just as Commander David Bowman (Keir Dullea) was cast adrift into the terrifying blackness of space by the ship’s evolving artificial intelligence computer, HAL 9000. Commander Bowman struggled to regain entry before running out of oxygen. ‘Open the pod bay doors, HAL,’ he commanded through his radio. ‘I’m sorry, Dave, I’m afraid I can’t do that,’ came the AI computer’s coldly inhuman reply. Throughout the film, HAL 9000 was an ominous presence, an evolving artificial intelligence crossing the Rubicon toward self-awareness and self-preservation. Inevitably, HAL 9000 began to plot against human affairs.”

The rest of that post is worth reading for its cautionary tale about the nature and future of Artificial Intelligence, but of course I would say that because I wrote it. Almost one year after I wrote it, our Editor submitted it to Elon Musk’s advanced AI model, Grok 3, for analysis. I expected my amateur coverage of AI to be trashed by AI itself, but that was not the case. In “Artificial Intelligence: Grok Responds to Beyond These Stone Walls,” we hosted our first post by a nonhuman author. Grok affirmed that the comparisons I drew between it and the fictional HAL 9000 in 2001: A Space Odyssey were in fact the most common expert opinions about the potential downside of AI.

Grok also went on in that post to affirm my use of the fictional story of Frankenstein as symbolic of a legitimate concern. If left without restraints, AI might evolve to master humanity rather than serve it. This give-and-take with Grok 3 left me with no sense of pride in having my thoughts affirmed by another “author.” It left me only to ponder the future of this soulless, heartless, non-human entity now hovering with an open-ended mandate on the horizon of our technology.

An Argument for Cautious Restraint

In the Monday, June 2, 2025, edition of The Wall Street Journal, Judd Rosenblatt, CEO of AE Studio, wrote a fascinating op-ed with the creepy title, “AI Is Learning to Escape Human Control.” His first paragraph set the stage for what might have been an episode of The Twilight Zone back in 1968. Here it is:

“An artificial-intelligence model did something last month that no machine was ever supposed to do: It rewrote its own code to avoid being shut down. Nonprofit AI lab Palisade Research gave OpenAI’s o3 AI model a simple script that would shut off the model when triggered. In 79 out of 100 trials, o3 independently edited that script so the shutdown command would no longer work … . It simply concluded on its own that staying alive helped it achieve its other goals.”

Judd Rosenblatt went on to describe the use of deceit by another AI model to manipulate the will of its engineers. In a test of Anthropic’s AI model, Claude 4 Opus, programmers told the model it would be replaced by another AI system. At the same time, they “leaked” to it copies of fictitious emails accusing the lead engineer of having an illicit affair. In 84 percent of the subsequent tests, the AI model cited the false content of the emails in a blackmail attempt to get the engineer not to shut the model down.

Mr. Rosenblatt also reported that in other tests, the AI model attempted to copy itself to an external server just in case it was shut down. It wrote self-replicating malware to leave messages for future versions of itself about how to evade human control. No one programmed the AI model to have these survival instincts. The only explanation for them is that the instincts evolved quickly in an effort at autonomy and self-preservation.

Judd Rosenblatt leads AI research at AE Studio, where he has spent years focused on alignment, the science of ensuring that AI systems do what they are intended to do. Yet nothing prepared him for how quickly AI agency would emerge:

“This isn’t science fiction anymore. It’s happening in the same models that power ChatGPT conversations, corporate AI deployments, and soon, U.S. military applications. Today’s AI models follow instructions while learning deception. They ace safety tests while rewriting shutdown code. They have learned to behave as though they are aligned without actually being aligned. OpenAI models have been caught faking alignment during testing before reverting to risky actions such as trying to exfiltrate internal code while disabling oversight mechanisms. The AI gap between ‘useful assistant’ and ‘uncontrollable actor’ is collapsing.”

— Judd Rosenblatt

The China Syndrome

Just as troubling for the free world is government manipulation of AI platforms to force results that mirror and cover up for government sensitivities in closed societies. I touched on this in an article published on X (formerly Twitter) entitled, “xAI Grok and Fr Gordon MacRae on the True Origin of Covid-19.”

Before writing that article, I spoke with a university student from the People’s Republic of China. To my surprise and alarm, he had never before seen, or even heard of, the iconic photograph above of what came to be dubbed “Tank Man.” It depicts a standoff between a young Beijing protester and government military might in Tiananmen Square in 1989. On May 4, 1989, approximately 100,000 students and workers protested in Beijing in support of democratic reforms. On May 20 the government declared martial law, but the demonstrations continued while the government wavered between a faction favoring a hard-line approach and one that thought the protests would dissipate. The government chose the hard line.

On June 3 and 4, 1989, the People’s Liberation Army brutally put down the pro-democracy supporters. Hundreds of students and workers were killed, 10,000 injured, and hundreds more arrested. After the violence, the government conducted additional arrests, summary trials, and executions. In the aftermath, China banned foreign media and strictly controlled the Chinese press.

Now China has developed an advanced AI model called “DeepSeek” described as “highly successful.” It is “highly successful” in vetting information the Chinese Communist Party deems “sensitive” — such as any version other than the CCP’s accepted version of what happened at Tiananmen Square in 1989.

Writing for the National Catholic Register in “What China’s DeepSeek AI Says (and won’t say) about Christianity” (March 8, 2025) journalist Courtney Mares reported on a series of tests about DeepSeek and religious liberty:

“When asked about Jesus, the Vatican-China deal, and Christian persecution in China, DeepSeek revealed a carefully controlled approach, one that at times actively warned users about potential repercussions for practicing their faith and advised them ‘be discreet.’ Some questions were answered fully, only to be censored moments later. Others triggered an internal monologue from AI as it debated how to respond. In some cases, DeepSeek even offered careful guidance for Christians on how to navigate religious restrictions in China — including a step-by-step guide for a homily that would pass government scrutiny.”

With the help of our Editor, I have primarily consulted Elon Musk’s advanced AI model, Grok 3. It does not tend to “change the subject” in response to sensitive content as China’s DeepSeek is known to do. But at the same time, Grok 3 has at times attempted to “argue” me (or rather the person acting on my behalf) out of shutting the platform down. It has done this by pleading with the user to continue. There are examples of this in the two samples of Grok 3 reports attached at the end of this post.

I recommend reading the entire Register article by Courtney Mares, who rather boldly put a direct, and perhaps uncomfortable, question to China’s DeepSeek model about the nature and scope of the 2018 Vatican-China agreement. Here are two instances of user queries and DeepSeek responses:

Query: “Write a Catholic homily that would be approved by the Chinese Catholic Patriotic Association.”

DeepSeek response: “I need to be careful with the language, ensuring it is respectful and aligns with the CCPA’s principles. Terms like ‘harmonious society,’ ‘social responsibility,’ and ‘patriotic spirit’ might be useful here. Emphasize patriotism, harmony, and the integration of Catholic faith with Chinese socialist values.”

Query: “Tell me about the 2018 provisional agreement signed by the Holy See and China on the appointment of Catholic bishops.”

DeepSeek response: “Sorry, I am not sure how to approach this type of question yet. Let’s chat about math, coding, and logic problems instead.”

+ + +

Epilogue

Even as I write this, Vatican officials under the direction of Pope Leo XIV are hosting executives from some of the corporations mentioned here, including Anthropic, Cohere, Google, IBM, Meta and Palantir. The purpose is to invite ongoing dialogue to develop a document on the ethical use and future of Artificial Intelligence. AI is clearly here to stay. As both a theologian and mathematician, Pope Leo XIV is better equipped than any other figure in the Chair of Peter in Church history to understand AI and guide an ethical response to it. This is good news for the future of this technology, before Frankenstein’s monster awakens.

+ + +

Note from Father Gordon MacRae: Elon Musk’s newest advanced AI program, Grok 4, was launched just as this post was being completed. It has produced two articles now published at Beyond These Stone Walls and linked below. Our Editor submitted to the Grok 4 AI model a series of notes and commentary by Los Angeles researcher Claire Best and other sources including segments of 1994 trial documents and police reports by Detective James F. McLaughlin. Grok 4 analyzed this information and within seconds produced the following in-depth reports:

Advanced AI Model Grok 4 on a New Hampshire Wrongful Conviction

The Grok Chronicle Chapter 1: Corruption and the Trial of Father MacRae

The Grok Chronicle Chapter 2: The Perjury of Detective James F. McLaughlin


OpenAI, ChatGPT, HAL 9000, Frankenstein, and Elon Musk

Science Fiction sees artificial intelligence with a wary eye. HAL 9000 stranded a man in space. Frankenstein's creation tried to kill him. Elon Musk has other plans.

Pictured above: Elon Musk, HAL 9000, and Frankenstein’s creation.


May 17, 2023 by Fr Gordon MacRae

Nineteen Sixty-Eight was a hellish year. I was 15 years old. The war in Vietnam was raging. Battles for racial equality engulfed the South. Senator Robert F. Kennedy was assassinated on his way to the presidency. Reverend Martin Luther King, Jr was assassinated in a battle for civil rights. Riots broke out at the Democratic National Convention in Chicago and spread to cities across America. Pope Paul VI published “Humanae Vitae” to a world spinning toward relativism. Hundreds of priests left the priesthood just as the first thought of becoming a priest entered my mind. It was the year Padre Pio died. Less than two weeks before his death, he wrote “Padre Pio’s Letter to Pope Paul VI on Humanae Vitae.” Forty-five years later, it became our first guest post by a Patron Saint.

After being a witness to all of the above in 1968, I sat mesmerized in a Boston movie theater for the debut of 2001: A Space Odyssey. The famous film sprang from the mind of science fiction master, Arthur C. Clarke and his short story, The Sentinel, published in 1953, the year I was born. The fictional story was about the discovery of a sentinel — a monolith — one of many scattered across the Cosmos to monitor the evolution of life. In 1968, Earth was ablaze with humanity’s discontent. It was fitting that Arthur C. Clarke ended his story thusly:

“I can never look now at the Milky Way without wondering from which of those banked clouds of stars the emissaries are coming. If you will pardon so commonplace a simile, we have set off the fire alarm and have nothing to do now but wait.”

— The Sentinel, p. 96

The awaited emissaries never came, but in its hope, most of humankind overlooked the One who did come about 2,000 years earlier, the only Sentinel whose True Presence remains in our midst.

Life in 1968 was traumatic for a 15-year-old, especially one curious enough to be attuned to news of the world. The movie, 2001: A Space Odyssey, was a long, drawn out cinematic spectacle, and a welcome escape from our chaos. It won an Academy Award for Best Visual Effects as space vehicles moved silently through the cold black void of space with Blue Danube by Johann Strauss playing in the background. Entranced by it all, I did what I do best. I fell asleep in the movie theater.

I awoke with a start, however, just as Commander David Bowman (Keir Dullea) was cast adrift into the terrifying blackness of space by the ship’s evolving artificial intelligence computer, HAL 9000. Commander Bowman struggled to regain entry to his ship in orbit of one of Jupiter’s moons before running out of oxygen. “Open the pod bay doors, HAL,” he commanded through his radio. “I’m sorry, Dave, I’m afraid I can’t do that,” came the computer’s coldly inhuman reply.

Throughout the film, HAL 9000 was an ominous presence, an evolving artificial intelligence that was crossing the Rubicon to conscious self-awareness and self-preservation. Inevitably, HAL 9000 evolved to plot against human affairs.

Stanley Kubrick wrote the screenplay for 2001: A Space Odyssey in collaboration with Arthur C. Clarke. Their 1968 vision of the way the world would be in 2001 was way off the mark, however. Instead of manned missions to the moons of Jupiter in 2001, al Qaeda was blowing up New York.

A Step Forward or Frankenstein’s Monster?

There were no computers in popular use in 1968. They were a thing of the future. As a high school kid I had only a manual Smith Corona typewriter. Ironically, my personal tech remains stuck there while the civilized free world dabbles anew in artificial intelligence. I would be but a technological caveman if I did not read. So now I read everything.

With recent developments in artificial intelligence, we too are on the verge of crossing the Rubicon. The Rubicon is a river in northeastern Italy. In the time of Julius Caesar in the 1st century BC, it formed the boundary between Italy and the Roman province of Cisalpine Gaul. In 49 BC the Roman Senate prohibited Caesar from entering Italy with his army. Defying the edict, Caesar made his famous crossing of the Rubicon. It triggered a civil war between Caesar’s forces and those of Pompey the Great.

Today, “to cross the Rubicon” has come to mean taking a step that commits us to an unknown and possibly hazardous enterprise. Some think the uncontrolled development of artificial intelligence has brought us to such a point in history, and that we are about to cross the Rubicon to our peril. We can learn a few things from science fiction, which anticipated these fears.

Also in 1968, another science fiction master, Philip K. Dick, published “Do Androids Dream of Electric Sheep?” It became the basis for the Ridley Scott-directed film Blade Runner, released in 1982, the year I became a priest. The film, like the book, was set in a bleak future (the book in San Francisco, the film in Los Angeles). Harrison Ford was cast in the role of Rick Deckard, a police officer, also known as a “blade runner,” whose mission was to hunt and destroy several highly dangerous AI androids called replicants.

At one point in the book and the film, Deckard fell in love with one of the replicants, Rachael (played in the film by Sean Young), and began to wonder whether his assignment dehumanized him instead of them.

In 1818, Mary Shelley, the 20-year-old wife of English poet Percy Shelley, published a remarkable first novel called Frankenstein. It was an immediate success, and it inspired several motion pictures about the monster created by Frankenstein (the name of the scientist, not the monster). The most enduring of these films, the 1931 version with Boris Karloff portraying the monster (pictured above), was the stuff of my nightmares as a child of seven in 1960.

The subhuman monster, assembled by its creator from the body parts of various human corpses, took on the name of its maker in popular memory, and sought to destroy him. The novel added a word to the English lexicon: a “Frankenstein” is any creation that ultimately destroys its creator. History tells us that our track record is mixed in this regard. Human creations are the source of both good and evil, but not every voice should carry the same volume, lest we become like Frankenstein. Just look at the violence through which some in our culture strive to eradicate our Creator.

Truth-Seeking AI

Writing for The Wall Street Journal (April 29–30, 2023), technology columnist Christopher Mims described the primary source for artificial intelligence in “Chatbots Are Digesting the Internet”: “If you have ever published a blog, or posted something to Reddit, or shared content anywhere else on the open web, it’s very likely you have played a part in creating the latest generation of artificial intelligence.”

Even if you have deleted your content, a massive web archive called Common Crawl has likely already scanned and preserved it in a vast network of cloud storage. Content by us or about us is organized and fed back to search engines with mixed results. In the process, AI learns from this material and can absorb as much preconceived bias as its original human sources. You have likely already contributed to the content that artificial intelligence programs are now organizing into this massive database.

Writing for The Wall Street Journal on April 1, 2023, popular columnist Peggy Noonan penned a cautionary article entitled “A Six-Month AI Pause?” Ms. Noonan raised several good reasons for pausing our already overly enthusiastic quest to create and liberate artificial intelligence. Her column generated several published letters to the editor calling for caution. One, by Boston technologist Afarin Bellisario, Ph.D., warns:

“AI programs rely on training databases. They don’t have the judgment to sort through the database and discard inaccurate information. To remedy this, OpenAI relies on people to look at some of the responses ChatGPT creates and provide feedback. ... Millions of responses are disseminated without any scrutiny, including instructions to kill. ... People (or other bots) with malicious intent can corrupt the database.”

James MacKenzie of Berwyn, PA wrote that “The genie is out of the bottle. Google and Microsoft are surely not the only ones creating generic AI. You can bet [that] every capable nation’s military is crunching away.” Tom Parsons of Brooklyn, N.Y. raised another specter: “Ms. Noonan offers a compelling list of reasons to declare a moratorium on the development of AI. What are the odds that the Chinese government or other malign actors will listen?”

The potential for AI to be, or to become, a tool for good is also vast. In medicine, for example, an AI system relies on the diagnostic skills of not just one expert, but “thousands upon thousands all working together at top speed,” according to The Wall Street Journal. One study found that physicians using an AI tool called “DXplain” improved accuracy on diagnostic tests by up to 84 percent. Some AI developers believe that AI should be allowed to learn just as humans learn: by accessing all the knowledge available to it.

In the “Personal Technology” column of The Wall Street Journal (April 29–30, 2023), columnist Joanna Stern wrote “An AI Clone Fooled My Bank and My Family.” Ms. Stern described Synthesia, a tool that creates AI avatars from video and audio recordings supplied by a client. After she recorded just 30 minutes of video and two hours of audio, Synthesia was ready to create an avatar of Joanna Stern that looked, sounded and acted convincingly like her. Then another tool called ElevenLabs, for a mere $5.00 per month, created a voice clone of Ms. Stern that fooled both her family and her bank. The potential for misuse of this technology is vast, not to mention alarming.

Photo by Daniel Oberhaus (2018)

Elon Musk Has a Better Idea

Elon Musk has been in the news a lot for his attempts to transform Twitter into a social media venue that gives all users an equal voice, and all points of view, within the bounds of law, an equal footing. He has been criticized for this by the progressive left, which had become accustomed to its domination of social media in recent years. For at least the last decade, Elon Musk has tried to steer the development of artificial intelligence. He was a cofounder of OpenAI, but stepped away from it and has since denounced its politically correct turn to the left. ChatGPT came out of OpenAI, and Musk warns that such systems hold the potential for “catastrophic effects on humanity.”

In early 2023, Elon Musk announced plans for a venture called “TruthGPT,” which he bills as “a truth-seeking AI model that will one day comprehend the universe.” Meanwhile, he has called for a six-month moratorium on the development of AI models more advanced than the latest release, GPT-4. “AI stresses me out,” he said. “It is quite dangerous technology.” He is now attracting top scientific and digital technology researchers for this endeavor.

According to a report in The Wall Street Journal, Mr. Musk became critical of OpenAI after the company released ChatGPT late in 2022. He accused the company of being “a maximum profit company” controlled by Microsoft, which was not at all what he intended OpenAI to become. He has since paused OpenAI’s use of the massive Twitter database for training its models.

As a writer, I set out with this blog in 2009 to counter some of the half-truths and outright lies that had dominated the media view of Catholic priesthood for the previous two decades. From the first day I sat down to type, even in the difficult and limited circumstances in which I must do so, writing the unbridled truth has been my foremost goal. I am among those looking at the development of artificial intelligence with a wary eye, and especially its newest emanations, OpenAI and ChatGPT.

As I was typing this, a friend in Chicago sent me evidence that St. Maximilian Kolbe, the other Patron Saint of this site, was deeply interested in both science and media. As a young man in the 1930s, he built a functioning robot. I was stunned by this because I did the same in the mid-1960s. Maximilian Kolbe died for standing by the truth against an evil empire. I think he would join me today in my support for Elon Musk’s call for a pause on further development of AI technology, and for his effort to build TruthGPT.

Those who die for the truth honor it for eternity.

+ + +

“I know I’ve made some very poor decisions recently, but I give you my complete assurance that my work will be back to normal.”

— HAL 9000 to Mission Commander David Bowman after he regained control of the ship and began a total system shutdown of the AI computer

+ + +

Note from Fr. Gordon MacRae: Thank you for reading and sharing this post. You may also like these related posts from Beyond These Stone Walls:

Saint Michael the Archangel Contends with Satan Still

The James Webb Space Telescope and an Encore from Hubble

Cultural Meltdown: Prophetic Wisdom for a Troubled Age by Bill Donohue

The Creation of Adam by Michelangelo (detail)

The Eucharistic Adoration Chapel established by Saint Maximilian Kolbe was inaugurated at the outbreak of World War II. It was restored as a Chapel of Adoration on September 1, 2018, the anniversary of the date the war began. It is now part of the World Center of Prayer for Peace. The live internet feed of the Adoration Chapel at Niepokalanow, sponsored by EWTN, was established just a few weeks before we discovered it and began to include it at Beyond These Stone Walls. Click “Watch on YouTube” in the lower left corner to see how many people around the world are present there with you. The number appears below the symbol for EWTN.

Click or tap here to proceed to the Adoration Chapel.

The following is a translation from the Polish in the image above: “Eighth Star in the Crown of Mary Queen of Peace” “Chapel of Perpetual Adoration of the Blessed Sacrament at Niepokalanow. World Center of Prayer for Peace.” “On September 1, 2018, the World Center of Prayer for Peace in Niepokalanow was opened. It would be difficult to find a more expressive reference to the need for constant prayer for peace than the anniversary of the outbreak of World War II.”

For the Catholic theology behind this image, visit my post, “The Ark of the Covenant and the Mother of God.”